When ChatGPT became publicly available in November 2022, it felt less like a product launch and more like a seismic event. A million users signed up within days, businesses scrambled to understand the implications, and a wave of investment reshaped the technology industry. To many, it seemed as though a powerful, transformative new form of intelligence had appeared overnight.

Yet, this "overnight success" was decades in the making. The seemingly sudden arrival of accessible, powerful AI was not a spontaneous invention but the brilliant culmination of a long, methodical journey. It is the story of compounding innovation, where each generation of researchers stood on the shoulders of those who came before, solving one fundamental problem after another. From the early days of brittle, rule-based systems to the pivotal shift toward learning from data, and from the architectural breakthroughs that finally allowed computers to understand language in sequence to the scaling laws that unlocked entirely new capabilities, each step was a necessary part of the story. Understanding this history offers a powerful strategic lens – a way to see beyond the hype, recognize the patterns of real innovation, and build a clear-eyed strategy for the future.

Understanding the AI Landscape Beyond Chatbots

The sudden arrival of ChatGPT in late 2022 triggered a global conversation that often equates Artificial Intelligence with generative, conversational chatbots. While these large language models represent a monumental achievement, they are just one branch of a vast and diverse scientific discipline. Appreciating the journey that led to this moment means looking beyond the headlines to the foundational technologies that made it possible. This history reveals clear patterns of innovation that can help business leaders identify genuine opportunities and see how these technologies might bring value to their own operations.

The Many Disciplines of AI

Artificial intelligence encompasses several major branches, each with distinct goals and methods, and each suited to different classes of problems. Among them are:

Natural Language Processing

This is the domain of conversational AI. Natural Language Processing (NLP) focuses on the complex challenge of enabling computers to understand, interpret, and generate human language. The goal of NLP is to bridge the communication gap between humans and machines, with applications ranging from machine translation and sentiment analysis to the complex dialogue systems that now reshape everything from customer service to content creation and scientific research.

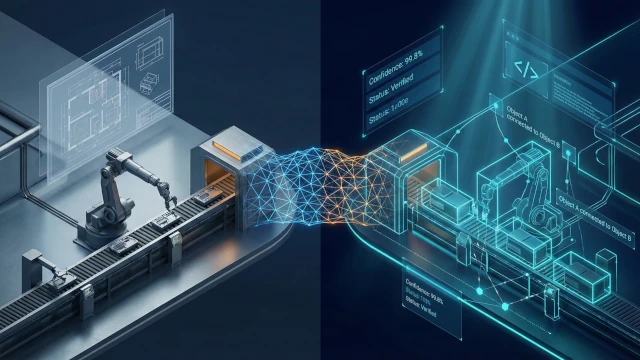

Computer Vision

Computer Vision gives machines the ability to "see" by interpreting and processing information from images and videos. This technology is the driving force behind practical applications like facial recognition, quality control on manufacturing lines, medical image analysis, and the perception systems in autonomous vehicles.

Robotics and Reinforcement Learning

While often linked, these are distinct fields. Robotics integrates AI with mechanical engineering to create agents that can physically interact with the world. Reinforcement Learning is a training paradigm that teaches an AI to make optimal decisions by learning from rewards and consequences, famously mastering complex strategic games like Go and chess.

Machine Learning as the Foundational Engine

Beneath most of these modern AI disciplines lies the foundational engine of machine learning. It represents a fundamental strategic shift away from the early days of AI, which were dominated by systems explicitly programmed with vast sets of rigid, human-coded rules. These early systems relied on intricate webs of "if-then" statements and logical operations that could simulate intelligence, but only for scenarios that programmers had explicitly anticipated.

Machine learning instead gives systems the ability to learn patterns, make predictions, and improve from experience by analyzing vast amounts of data. This "bottom-up" philosophy – where intelligence emerges from data rather than being hard-coded – is the bedrock upon which nearly all of today's significant AI advancements are built. Large language models are a product of applying a specific subfield of machine learning, known as deep learning, to the domain of natural language. Their story is a specific thread in the rich and interconnected history of AI research.

Early Foundations: From Explicit Rules to Learned Representations

The journey to create systems that can process and reason about information began with a fundamentally different philosophy than the one that powers AI today. The earliest attempts were rooted in a "top-down" approach, meticulously hand-crafting intelligence through logic. However, the inherent limitations of this method led to the single most important paradigm shift in AI history: the move from programming explicit rules to enabling systems to learn from data.

The Promise and Brittleness of Symbolic AI

From the 1950s through the 1980s, the dominant approach to AI was Symbolic AI, often called "Good Old-Fashioned AI" (GOFAI). The field traces its formal founding to the 1956 Dartmouth Workshop, whose proposal coined the term "artificial intelligence." Researchers believed that intelligence could be replicated by programming a computer with a vast, formal system of logical rules and symbols. This method produced remarkable early successes in closed, structured environments. Programs like the Logic Theorist could prove mathematical theorems, and early chatbots like ELIZA could simulate human conversation using clever rules.

However, this rule-based approach proved brittle when faced with the messy, ambiguous nature of the real world. A symbolic system designed to understand language would require a nearly infinite set of rules to account for every nuance, exception, and context. This brittleness was a key factor leading to the first "AI Winter" in the 1970s, as the initial promise of symbolic systems failed to scale to solve complex, real-world problems.
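To make the brittleness concrete, here is a minimal sketch of a pattern-matching responder in the spirit of early rule-based chatbots. The two rules and the fallback reply are hypothetical, written for illustration only; they are not taken from ELIZA or any other historical program.

```python
import re

# Hypothetical hand-written rules: (pattern, reply template).
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]


def respond(utterance: str) -> str:
    """Return the reply of the first matching rule, or a canned fallback."""
    for pattern, template in RULES:
        match = pattern.search(utterance)
        if match:
            # Reuse the captured text, minus trailing punctuation.
            return template.format(match.group(1).rstrip(".!?"))
    return "Please go on."  # no rule anticipated this input


print(respond("I feel tired today."))
print(respond("Lately everything seems exhausting."))
```

The second sentence expresses nearly the same idea as the first, yet no rule covers its wording, so the program falls back to a generic reply. Covering every phrasing would mean writing a new rule for each one.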

The Paradigm Shift to Data-Driven Learning

By the late 1980s, a "bottom-up," data-driven philosophy began to gain momentum, embodied by artificial neural networks. Instead of telling a computer how to recognize a cat with thousands of explicit rules, researchers could show a network a vast dataset of labeled images – some containing cats, and many others not – allowing it to learn the distinguishing patterns on its own.

This approach was powerful but also exceptionally narrow. A network trained this way could become highly accurate at its specific task, but its entire "knowledge" was limited to a single binary question: "cat" or "not a cat." It could not identify a dog, a car, or a building; it could only classify them as falling into the "not a cat" category. This specificity was a core limitation of early machine learning.
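As a rough illustration of learning from labeled examples, the sketch below trains a single artificial neuron (a perceptron) on a toy dataset. The three numeric features and all data points are hypothetical stand-ins. A real image classifier learns from raw pixels and far more examples.

```python
# Each example: (features, label). Features are hypothetical hand-picked
# numbers (whiskers, pointed_ears, wheels); label 1 = cat, 0 = not a cat.
DATA = [
    ((1.0, 1.0, 0.0), 1),
    ((1.0, 0.0, 0.0), 1),
    ((0.0, 0.0, 1.0), 0),
    ((0.0, 1.0, 1.0), 0),
    ((0.0, 0.0, 0.0), 0),
]


def predict(weights, bias, features):
    """Answer the only question this model knows: cat (1) or not a cat (0)."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def train(data, epochs=20, learning_rate=0.1):
    """Perceptron rule: nudge the weights whenever a prediction is wrong."""
    weights, bias = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for features, label in data:
            error = label - predict(weights, bias, features)
            weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train(DATA)
print([predict(weights, bias, features) for features, _ in DATA])
```

Nobody wrote a rule saying that wheels rule out a cat. The weights settle on that pattern from the labels alone, and the output space stays limited to the two categories the model was trained on.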

Building the Data Foundation for Modern AI

This paradigm shift introduced a new, critical prerequisite for success in artificial intelligence: data. The effectiveness of a machine learning model is directly tied to the quality and quantity of the data it is trained on. This realization transformed AI from a challenge of pure logic programming into a complex engineering problem centered on data infrastructure. Building successful, scalable AI solutions now required a robust data foundation – the ability to collect, store, manage, and process massive datasets efficiently. This strategic imperative created the conditions for the rise of modern cloud computing and data management services, which now form the essential backbone for any serious AI initiative.

Word Embeddings: The First Step to Understanding Meaning

The first true breakthrough in applying data-driven learning to the complexities of human language came with the development of word embeddings in the early 2010s. This technique taught models to represent words as numerical vectors in a multi-dimensional space. The position of each vector was determined by the contexts in which the word appeared in a massive body of text, meaning words used in similar ways ended up close to each other in this space.

This method allowed a model to learn abstract semantic relationships directly from text, without any human-programmed rules. The most famous example of this is the analogy vector("king") − vector("man") + vector("woman") ≈ vector("queen"). The model, by analyzing statistical patterns, learned the concepts of royalty and gender as directions within its vector space. For the first time, a machine could capture the rich meaning embedded in language, providing the essential mathematical foundation for all modern language models.
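The analogy can be reproduced with a toy example. The two-dimensional vectors below are invented for illustration, with one axis loosely standing for royalty and the other for gender. Real embeddings are learned from text, have hundreds of dimensions, and carry no such tidy labels.

```python
import math

# Hypothetical 2-D embeddings: axis 0 ~ "royalty", axis 1 ~ "gender".
EMBEDDINGS = {
    "king": (0.9, 0.8),
    "queen": (0.9, -0.8),
    "man": (0.1, 0.8),
    "woman": (0.1, -0.8),
    "apple": (-0.7, 0.0),
}


def cosine(a, b):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def analogy(a, b, c):
    """Return the word closest to vector(a) - vector(b) + vector(c)."""
    target = tuple(
        x - y + z for x, y, z in zip(EMBEDDINGS[a], EMBEDDINGS[b], EMBEDDINGS[c])
    )
    candidates = (word for word in EMBEDDINGS if word not in (a, b, c))
    return max(candidates, key=lambda word: cosine(EMBEDDINGS[word], target))


print(analogy("king", "man", "woman"))  # prints "queen"
```

Subtracting "man" and adding "woman" moves the point along the gender axis while leaving the royalty axis untouched, which is why the nearest remaining word is "queen."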

Solving the Sequence Problem for Practical Applications

Once AI systems could assign mathematical meaning to individual words, the next great challenge was understanding them in sequence. The order of words is paramount to meaning – "dog bites man" is fundamentally different from "man bites dog." This required a new type of neural network architecture, one designed specifically to process data where context and order are everything.

Recurrent Neural Networks Introduce Memory

The first major architecture designed for this task was the Recurrent Neural Network (RNN). The key innovation of an RNN is a "feedback loop" that creates an internal state, or "memory." As the network processes a sequence element by element, its calculations are influenced by the information from previous elements, allowing it to maintain a contextual understanding as it moves through the data. This ability to retain information from previous steps was a crucial advance for machine comprehension.
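The feedback loop can be sketched in a few lines. This is a deliberately tiny version with a single number as the hidden state and two fixed, hypothetical weights. A real RNN uses learned weight matrices and vector-valued states.

```python
import math


def rnn_step(hidden, x, w_hidden=0.5, w_input=1.0):
    """One recurrent update: the new state mixes the old state with the new input."""
    return math.tanh(w_hidden * hidden + w_input * x)


def run_rnn(sequence):
    """Feed a sequence through the loop and return the final state."""
    hidden = 0.0  # empty memory before the first element
    for x in sequence:
        hidden = rnn_step(hidden, x)
    return hidden


# Same elements, opposite order.
print(run_rnn([1.0, 0.0, -1.0]))
print(run_rnn([-1.0, 0.0, 1.0]))
```

Because each step folds the previous state into the next one, the two runs end in different states even though they contain the same elements. That is the order sensitivity that "dog bites man" versus "man bites dog" requires.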

Overcoming the Long-Range Memory Bottleneck

Despite their ingenuity, simple RNNs suffered from a critical flaw that limited their practical application, especially on longer sequences of text like documents or extended conversations.

The Vanishing Gradient Problem

During training, neural networks learn by a process called backpropagation. After a network makes a prediction, it compares its output to the correct answer to calculate an "error signal." This signal is then sent backward through the network's layers to make small adjustments. Think of it as a manager providing feedback on a multi-stage project; the feedback starts with the final outcome and is used to correct the actions at each preceding stage.

In an RNN, this error signal grew progressively weaker as it was propagated back through many steps of the sequence. This mathematical reality, known as the vanishing gradient problem, meant the network struggled to learn from words at the beginning of a sentence by the time it reached the end. It effectively "forgot" long-range context.
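A small numerical sketch shows why the signal fades. It assumes a scalar RNN with a tanh activation and fixed, hypothetical weights, and tracks how much the state at each step still depends on the starting state. By the chain rule, that dependence is a product of one factor per step, and here every factor is smaller than one.

```python
import math


def gradient_through_time(inputs, w_hidden=0.5, w_input=1.0):
    """Return d(h_t)/d(h_0) after each step of a scalar tanh RNN."""
    hidden, grad, history = 0.0, 1.0, []
    for x in inputs:
        hidden = math.tanh(w_hidden * hidden + w_input * x)
        # Local derivative of this step with respect to the previous state.
        grad *= w_hidden * (1.0 - hidden ** 2)
        history.append(grad)
    return history


history = gradient_through_time([0.5] * 30)
print(f"after 1 step: {history[0]:.3e}")
print(f"after 30 steps: {history[-1]:.3e}")
```

With these settings each factor is at most 0.5, so after 30 steps the product is below one billionth. An error signal scaled by a number that small can barely adjust how the network handled the first word.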

The Power of LSTM Networks

The solution, developed between 1995 and 1997 by Sepp Hochreiter and Jürgen Schmidhuber, came in the form of Long Short-Term Memory (LSTM) networks. LSTMs are a more sophisticated type of RNN built around a "gating mechanism" – a set of internal controls that let the network learn what information to store in its memory, what to forget, and what to pass along. This design made LSTMs far more effective at capturing long-range dependencies, and for many years they were the state-of-the-art architecture for NLP tasks, powering significant improvements in speech recognition and predictive text generation.
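The gating idea can be sketched with a scalar cell in the commonly taught formulation: a forget gate, an input gate, and an output gate. All weights in the dictionary are hypothetical and hand-set rather than learned, chosen so the cell holds on to its memory.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(cell, hidden, x, p):
    """One step of a scalar LSTM cell; `p` holds hypothetical, untrained weights."""
    forget = sigmoid(p["wf"] * x + p["uf"] * hidden + p["bf"])  # what to keep from memory
    write = sigmoid(p["wi"] * x + p["ui"] * hidden + p["bi"])   # how much new info to store
    output = sigmoid(p["wo"] * x + p["uo"] * hidden + p["bo"])  # how much memory to pass along
    candidate = math.tanh(p["wc"] * x + p["uc"] * hidden + p["bc"])
    cell = forget * cell + write * candidate
    hidden = output * math.tanh(cell)
    return cell, hidden


# Forget gate biased open (keep memory), input gate biased shut (ignore new input).
params = {"wf": 0.0, "uf": 0.0, "bf": 6.0, "wi": 0.0, "ui": 0.0, "bi": -6.0,
          "wo": 0.0, "uo": 0.0, "bo": 0.0, "wc": 1.0, "uc": 0.0, "bc": 0.0}
cell, hidden = 1.0, 0.0
for _ in range(50):
    cell, hidden = lstm_step(cell, hidden, 0.0, params)
print(f"memory after 50 steps: {cell:.3f}")
```

Because the memory is updated by scaling and adding rather than by being squashed through an activation at every step, a forget gate close to one lets information survive for many steps. In a trained network the gate values are learned from data instead of being fixed by hand.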

The Attention Mechanism Enables Dynamic Focus

Even LSTMs had limitations. They still processed text sequentially, which was computationally intensive. The true breakthrough was the attention mechanism. Instead of trying to compress an entire input sequence into a single, static memory, attention allows a model to look back over the entire input at every step of generating an output. It calculates a weighted "attention score" for each word in the input, determining how much influence each part of the input should have on the current output word. This ability to dynamically focus on the most relevant information, regardless of its position in the sequence, provided a robust solution to the long-range dependency problem and set the stage for the next great architectural leap.
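Here is a minimal sketch of that weighting step. It scores each input with a dot product against a query vector, which is one common choice and assumed here for simplicity. The two-dimensional vectors are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Weight each input by its similarity to the query, then blend the values."""
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    blended = [
        sum(w * value[i] for w, value in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return weights, blended


# Hypothetical 2-D vectors for three input words.
keys = values = [(1.0, 0.0), (0.0, 1.0), (0.7, 0.7)]
weights, blended = attend((2.0, 0.0), keys, values)
print([round(w, 3) for w in weights])
```

The first input, which is most similar to the query, receives the largest weight, no matter where it sits in the sequence. Position plays no role in the score, and that is what lets attention reach back across long distances.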

The Transformer Architecture Ignites a Silent Revolution

The attention mechanism was a powerful addition to existing sequential models, but in 2017, a landmark paper from Google researchers titled "Attention Is All You Need" proposed something far more radical. It introduced the Transformer architecture, a model that discarded sequential processing entirely and relied exclusively on attention. This innovation would become the foundational technology for virtually every modern language model. Indeed, the acronym GPT – used in the model family that includes ChatGPT – stands for Generative Pre-trained Transformer, highlighting the central role of this architecture.

Self-Attention and Massive Parallelization

The core innovation of the Transformer is a mechanism called self-attention, which allows every word in a sentence to look at and weigh its relationship with every other word in that same sentence simultaneously. This creates a far richer and more nuanced contextual understanding of the text.
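In self-attention, every token plays the role of the query against every token in the same sentence. The sketch below is a simplification: it uses scaled dot-product scoring and omits the learned query, key, and value projections that a real Transformer layer applies. The token vectors are hypothetical.

```python
import math


def self_attention(tokens):
    """Scaled dot-product self-attention without learned projections."""
    dim = len(tokens[0])
    weight_rows, outputs = [], []
    for query in tokens:  # every token acts as a query ...
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens  # ... against every token, itself included
        ]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        weight_rows.append(weights)
        outputs.append(
            [sum(w * token[i] for w, token in zip(weights, tokens)) for i in range(dim)]
        )
    return weight_rows, outputs


tokens = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
weight_rows, outputs = self_attention(tokens)
for row in weight_rows:
    print([round(w, 3) for w in row])
```

Each row of weights is computed without reference to any other row. The loop here runs them one after another, but nothing prevents all rows from being computed at the same time.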

While self-attention provided a more sophisticated method for understanding language, the most significant practical breakthrough was massive parallelization. Because RNNs and LSTMs processed text one word at a time, they were a poor fit for modern hardware like graphics processing units (GPUs), which excel at handling thousands of parallel computations at once. The Transformer, which processes every word in a sequence simultaneously, was a natural match for that hardware. This computational efficiency made it feasible to train models on vastly larger datasets and with billions of parameters – the internal variables, or weights, that a network learns during training and which effectively encode its knowledge.

From Research to Reality: BERT Transforms Google Search

The impact of the Transformer was immediate and profound. In 2018, Google introduced BERT (Bidirectional Encoder Representations from Transformers), a model that used the Transformer's ability to see the full context of a word by looking at the words that come before and after it simultaneously.

In October 2019, Google integrated BERT directly into its search engine, calling it the biggest leap forward in five years. This "silent revolution" allowed Google to understand the intent and nuance behind longer, more conversational queries. For example, a search for "2019 brazil traveler to usa need a visa" previously returned results for U.S. citizens traveling to Brazil. BERT correctly understood the importance of the word "to," grasping that the user was a Brazilian traveler wanting to go to the USA, and delivered the correct results about visa requirements for that specific journey.

A General-Purpose Architecture for Custom Solutions

Beyond its performance, the Transformer's success lay in its versatility. Unlike previous architectures designed for specific tasks, the Transformer proved to be a flexible, general-purpose framework suitable for an incredible range of language challenges, from translation and summarization to question answering. This adaptability made it the ideal foundation for building the kind of custom solutions needed to solve unique and complex business problems, moving beyond off-the-shelf tools to create genuine competitive advantages.

Scaling Laws Reveal a Predictable Path to New Capabilities

The invention of the Transformer provided the architectural vehicle for progress, but it didn't by itself explain the incredible leap in capabilities that was to come. The next crucial ingredient was a simple but powerful discovery that would define the strategic direction of AI development for years: performance improves predictably with scale.

The Predictable Power of Scaling Laws

In the years following the Transformer's invention, researchers began to observe a remarkably consistent pattern. They found that a model's performance on language tasks improved in a smooth, predictable way as three key factors were increased.

More Data, More Parameters, More Compute

The relationship, which became known as scaling laws, was clear. Model capabilities improved reliably as you scaled up:

- Data: Training datasets grew from curated collections to vast portions of the public internet, like the Common Crawl dataset, providing models with a broad foundation of human knowledge.

- Parameters: The number of internal variables that encode the model's knowledge grew from millions into the hundreds of billions.

- Compute: Training these models required enormous computational power, involving massive clusters of GPUs running for weeks or months at a time, representing a multimillion-dollar investment for a single training run.
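Published scaling-law studies typically describe this relationship as a power law, in which the model's error falls by a roughly constant factor each time a resource is multiplied by ten. The sketch below assumes that form. The reference scale and exponent are illustrative placeholders, not fitted values from any study.

```python
def predicted_loss(scale, reference_scale=1e13, exponent=0.08):
    """Power-law form: loss falls smoothly as scale grows.

    `reference_scale` and `exponent` are illustrative placeholders.
    """
    return (reference_scale / scale) ** exponent


previous = None
for parameters in (1e8, 1e9, 1e10, 1e11):
    loss = predicted_loss(parameters)
    note = "" if previous is None else f" ({loss / previous:.3f}x the previous loss)"
    print(f"{parameters:.0e} parameters -> loss {loss:.3f}{note}")
    previous = loss
```

Under this assumed form, every tenfold increase shrinks the loss by the same ratio. That regularity is what makes it possible to forecast the payoff of a larger training run before paying for it.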

To process this information, models break text down into tokens, which are common pieces of words or punctuation. The amount of tokenized text a model can handle at one time – including both the user's prompt and its own response – is known as its context window. A larger context window allows a model to "remember" more of a conversation or document, which is critical for complex tasks.
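A toy sketch makes the token budget tangible. The tokenizer below simply splits on words and punctuation, whereas production tokenizers use learned subword vocabularies. Dropping the oldest tokens first is one possible policy, assumed here for illustration.

```python
import re


def toy_tokenize(text):
    """Split into words and punctuation marks (real tokenizers use subword pieces)."""
    return re.findall(r"\w+|[^\w\s]", text)


def fit_to_context(prompt_tokens, max_response_tokens, context_window):
    """Keep the most recent prompt tokens that still leave room for the response."""
    budget = context_window - max_response_tokens
    if budget <= 0:
        raise ValueError("context window too small for the requested response")
    return prompt_tokens[-budget:]


tokens = toy_tokenize("Dog bites man. Man bites dog!")
kept = fit_to_context(tokens, max_response_tokens=4, context_window=8)
print(len(tokens), "tokens in the prompt")
print(kept)
```

The prompt has eight tokens, but reserving four for the response in an eight-token window leaves room for only the last four. Everything earlier is invisible to the model, so a small window means forgetting the start of a long conversation.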

A Strategic Roadmap for AI Investment

This was more than an academic observation; it provided a strategic roadmap. It suggested that the most reliable path to more capable AI was not just to invent new algorithms, but to invest heavily in the data and computation required to make existing architectures orders of magnitude larger. This insight turned cutting-edge AI research into a massive engineering and capital allocation challenge.

The GPT Series and the Dawn of Emergent Abilities

The evolution of OpenAI's GPT series is the clearest illustration of this scaling philosophy in action. Each new model wasn't a radical redesign but a massive scale-up, unlocking surprising new abilities at each step.

From GPT-1's Coherence to GPT-3's In-Context Learning

GPT-1 (2018) proved the concept of the generative Transformer. Its successor, GPT-2 (2019), scaled up more than tenfold to 1.5 billion parameters and demonstrated zero-shot learning – the ability to perform tasks it wasn't specifically fine-tuned for.

The next leap was astronomical. GPT-3 (2020), with 175 billion parameters, marked a paradigm shift. At this immense scale, the model began to exhibit emergent abilities – capabilities not present in smaller models. The most significant of these was in-context learning, where the model could learn a new task from just a few examples provided within the prompt itself. It could write functional code, solve logic puzzles, and generate prose that could appear remarkably human-like to a casual reader. This progression proved the scaling hypothesis: scale wasn't just making the models better; it was fundamentally changing what they could do.
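In-context learning needs no special machinery on the user's side: the "training" is just text placed in the prompt. The helper below is a hypothetical illustration of how such a few-shot prompt might be assembled. The translation task, the labels, and the function name are assumptions, and no model is called.

```python
def build_few_shot_prompt(examples, query, instruction="Translate English to French."):
    """Assemble a prompt whose examples demonstrate the task; no model weights change."""
    lines = [instruction, ""]
    for source, target in examples:
        lines += [f"English: {source}", f"French: {target}", ""]
    # End with an unanswered item for the model to complete.
    lines += [f"English: {query}", "French:"]
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    examples=[("cheese", "fromage"), ("good morning", "bonjour")],
    query="thank you",
)
print(prompt)
```

A sufficiently large model, given this text, tends to continue the pattern and fill in the final line. The examples steer its behavior for that one request only.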

The Final Catalyst: From Raw Power to a Usable Product

By late 2022, the underlying technology for a powerful AI was in place, but it remained largely a tool for researchers and developers. GPT-3 was brilliant but often unruly, requiring careful prompting to produce helpful responses. The final step in its journey was not about making the model bigger, but making it accessible, safe, and useful. This was the crucial transformation from a powerful engine into a polished product.

The Interface: Unlocking AI for Everyone

Arguably the most profound innovation of ChatGPT was not its algorithm, but its user interface. For the first time, a state-of-the-art language model was offered to the public through a simple, intuitive chat window – free and instantly available in a web browser. This design choice democratized access on an unprecedented scale. Millions of people who had never written a line of code could now interact with advanced AI through natural conversation, removing the barriers of APIs and complex prompt engineering.

The Alignment: Fine-Tuning with Human Feedback

The second breakthrough was a technique designed to make the model's behavior align more closely with human values and intentions. The process is known as Reinforcement Learning from Human Feedback (RLHF). To implement RLHF, human labelers ranked different model outputs to teach a "reward model" what constitutes a good or bad answer. The language model was then fine-tuned using this reward model as a guide, learning to prioritize responses that humans would find helpful, harmless, and honest. This alignment process, built on OpenAI's InstructGPT research, was the secret sauce that made ChatGPT feel so much more coherent and cooperative compared to its raw predecessors.
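One way to turn human rankings into a training signal is a pairwise preference loss, a common choice in published RLHF work and assumed here for illustration. The sketch shows only the loss for a single ranked pair. The reward scores are hypothetical numbers, not outputs of a real reward model.

```python
import math


def preference_loss(reward_preferred, reward_rejected):
    """Pairwise ranking loss: small when the preferred answer scores higher."""
    margin = reward_preferred - reward_rejected
    return math.log(1.0 + math.exp(-margin))  # same as -log(sigmoid(margin))


# Hypothetical reward-model scores for two answers to the same prompt.
agrees = preference_loss(2.0, -1.0)     # reward model agrees with the labeler
disagrees = preference_loss(-1.0, 2.0)  # reward model contradicts the labeler
print(f"agrees: {agrees:.3f}, disagrees: {disagrees:.3f}")
```

Training the reward model means adjusting it until this loss is low across many ranked pairs. The language model is then fine-tuned to produce answers that the reward model scores highly.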

Operationalizing a Model for a Global Audience

Launching ChatGPT was not just a technical experiment; it was a massive operational challenge. Making a large-scale model available to the public required an immense and scalable infrastructure capable of handling unpredictable, viral growth. The system had to serve millions of simultaneous queries while maintaining performance, a task that goes far beyond simply training the model. This step from a research artifact to a live, robust, global product represents the complex operationalization and MLOps challenges that are central to delivering real-world AI value.

The Tipping Point for Mass Adoption

The combination of these factors – raw capability derived from scale, a frictionless user interface, and carefully aligned behavior – created the perfect storm for mass adoption. ChatGPT reached one million users in just five days and an estimated 100 million in two months, making it at the time the fastest-growing consumer application on record. This launch sent a shockwave through the industry, prompting a "code red" at Google and accelerating a multibillion-dollar investment from Microsoft into OpenAI. This was the tipping point where advanced AI moved from corporate research labs and developer APIs into the mainstream cultural and business consciousness, kicking off a global AI boom – an explosion of investment and innovation whose repercussions continue to shape the technological landscape.

A Decades-Long Journey to an Overnight Success

The launch of ChatGPT was widely seen as an "overnight success," a sudden technological leap that appeared to come from nowhere. But as this history shows, it was the brilliant culmination of a long, deliberate, and cumulative journey. It stands on the shoulders of decades of research, representing the tipping point of an era, not the start of one.

A Clear Technological Lineage

The path that led to the current moment reveals a clear and logical progression of innovation, with each breakthrough building directly on the last. The limits of Symbolic AI created the need for data-driven Machine Learning. Word Embeddings gave language a mathematical form. RNNs and LSTMs made it possible to process sequences, and the Attention Mechanism removed their long-range memory bottleneck. The Transformer architecture leveraged attention to unlock massive parallelization and scale. Scaling Laws provided a predictable roadmap for making models more powerful, and finally, RLHF and an accessible interface turned that raw power into a usable product. This chain of compounding innovation is the true story behind the recent AI revolution.

The Revolution Was Accessibility, Not Invention

While the underlying technology is revolutionary, the social and business explosion of late 2022 was primarily driven by accessibility. Powerful AI, in the form of the Transformer architecture, had already been creating immense value for years, silently improving Google Search for billions of users. The true catalyst for the boom was not the invention of a new technology, but the packaging of that existing, powerful technology into a product that anyone could use, understand, and experiment with. The revolution was the moment AI was placed directly into the hands of the public.

Applying Historical Lessons to Your AI Strategy

Understanding this history provides a powerful framework for navigating the current AI landscape. It shows that major breakthroughs are not random; they are solutions to fundamental problems, built upon a stable foundation. This pattern is continuing today. For example, the next strategic frontier is multimodality – the convergence of different branches of AI. Systems are now emerging that can understand not just text, but also images, audio, and video, connecting the lineage of Natural Language Processing with the parallel history of Computer Vision.

For businesses, this opens up new possibilities for creating far richer and more context-aware solutions. Recognizing these patterns is key to developing a durable AI strategy that looks beyond the current hype cycle.

Why an Experienced Partner Matters

The history of artificial intelligence teaches a clear lesson: there is no single magic bullet. True business value comes from strategically applying the right solution from a diverse technological landscape – whether that's a computer vision model, a classic machine learning algorithm, or a large language model – to the right problem. This is a philosophy we at Gauss Algorithmic have honed through years of hands-on work, long before the recent generative AI boom captured the public's imagination.

Our expertise is not just in building models, but in the entire lifecycle that makes them successful: from the initial discovery, through the strategic foresight to identify the most impactful use cases and the foundational engineering of robust data pipelines, to the disciplined practice of operationalizing AI so that a promising prototype becomes a reliable, integrated part of your business. Partnering with a team that has this deep, historical perspective allows you to move beyond the hype and build solutions that are not only innovative but fundamentally sound.